The Difference-in-Difference (DiD) analysis is a powerful econometric tool with very practical uses.

The classical use of DID is to analyze the difference between two groups after some sort of treatment. Say, for example, that you want to compare scores of a college entrance exam, the SAT, for two groups of students, one group that participated in an SAT prep course (the treatment group) and one group that did not (the control group). Let’s further assume that all students took the SAT prior to the start of the course. And as a last assumption, enrollment in the prep course was not random. Students were required to express interest in the program.

In the above example, you could simply compare the final SAT scores of the treatment and control group to gauge the success of the enrichment program. For example, if the treatment group scored 1400 on average, but the control group scored 1200, the 200-point difference could be viewed as the effect of the program.

The problem with that approach is that it does not take other important factors into consideration such as time (maybe students just get better at taking the test over time) and starting position (maybe the treatment group scored higher on the first round of testing than the control group, providing a “head start” ). DiD separates the difference in SAT scores, 200 points in our example, into its constituent parts – time, starting position and the effect of treatment – quantifying the effect of each component. Of the additional 200 points the treatment group scored over the control, perhaps 50 of the points were due to the fact that the treatment group are just better test-takers, as evidenced by the original SAT scores, and would have scored on average 50 points higher even in the absence of treatment. DiD allows to you isolate the pure treatment effect.

To set up the analysis, we created two dummy/categorical variables that take on a value of either 0 or 1:

- Time: The time variable denoting whether the observation is pre-treatment (first SAT score) or post-treatment (second SAT score)

- Participant: Whether a student completed the prep course (the treatment)

We then created a variable called time_participant, which is the interaction between these two variables (i.e. the time variable multiplied by the participant variable).

We generally perform econometric analysis in Stata, but it is our understanding that this can also be done in R. Note that t0 refers to the first test and t1 refers to the second.

Variables under consideration:

Score: The SAT score. The dependent variable in this example

Time: The change in SAT score attributable to time, with the assumption being that students performed better the second time that they took the test. The coefficient on this variable represents the increase in score from t0 to t1 for students that did not complete the prep course.

Participant: The change in SAT score attributable to differences between the two groups prior to treatment. This coefficient quantifies the difference between participants and non-participants at t0, i.e. prior to the start of the prep course.

Time_Participant: The difference-in-difference variable. This coefficient displays the difference between program participants and non-participants at t1 while controlling for differences at t0.

The resulting regression equation is:

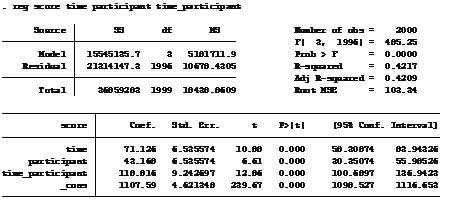

SAT Score = 1107.59 + 71.126time + 43.168participant + 118.816time_participant

In simpler terms, the above model predicts that all students are expected to score at least 1108 on the first test. Taking the test a second time is expected to boost scores by 71 points. As stated above, participation is not random. There is a selection bias built into the model in that those students that decided to participate in the program already had on average higher scores on the original test than the the students that chose not to participate. The difference in scores between those that chose to participate and those that did not PRIOR to participating in the program (i.e. at t0) is 43 points. And lastly, taking the test a second time and participating in the enrichment program together increases scores by 119 points.

Taken all together, a student that did not participate in the prep course should expect an SAT score of 1179 (constant plus coefficient on the time variable) on the second exam. While a student that participated in the course can expect to achieve 1341 on their second attempt, a difference of 162 points.

In conclusion, the DiD model is most useful in zeroing in on the effect of the treatment while excluding the effect of other variables: time in this example, but potentially also gender, age, or other factors that could affect outcomes. Students participating in the course scored 162 points higher than students that did not, but the effect of the treatment on its own was only 119 points.

An example of how this could be useful is a simple cost benefit analysis. Given the time and expense associated with developing and administering this program, let’s assume that the school set a threshold of 150 points. At 150 points or higher, the program is worth the cost, but below that threshold, it is not. Looking at the pure results, an expected increase of 162 points would exceed the threshold, triggering an expansion of the program. However, through difference-in-difference, administrators see that the program itself is only responsible for 119 points of that gain, thus falling short of expectations.

Note: The sample dataset and the Stata Do-file can be found on Github.